Highlights:

- Introduces a layered canvas for precise spatial control in personalized image generation.

- Allows multiple subjects to be placed, resized, and locked independently, similar to professional image-editing tools.

- Features a novel locking mechanism requiring no architectural model changes.

- Developed by Guocheng Gordon Qian and collaborators working in computer vision and AI research.

TLDR:

LayerComposer, a new framework for interactive multi-subject text-to-image generation, introduces a layered canvas and a locking mechanism that bring Photoshop-like spatial control to AI image synthesis while preserving visual fidelity and subject identity.

A research team led by Guocheng Gordon Qian, alongside Ruihang Zhang, Tsai-Shien Chen, Yusuf Dalva, Anujraaj Argo Goyal, Willi Menapace, Ivan Skorokhodov, Meng Dong, Arpit Sahni, Daniil Ostashev, Ju Hu, Sergey Tulyakov, and Kuan-Chieh Jackson Wang, has introduced a new approach to personalized text-to-image (T2I) generation in *LayerComposer: Interactive Personalized T2I via Spatially-Aware Layered Canvas*. The study, published on arXiv, addresses one of the most persistent limitations in generative AI: the lack of intuitive spatial and compositional control when an image must contain multiple distinct subjects. Existing models produce high-fidelity outputs but often struggle to balance visual realism with spatial precision, which can lead to occlusion between subjects or other compositional artifacts.

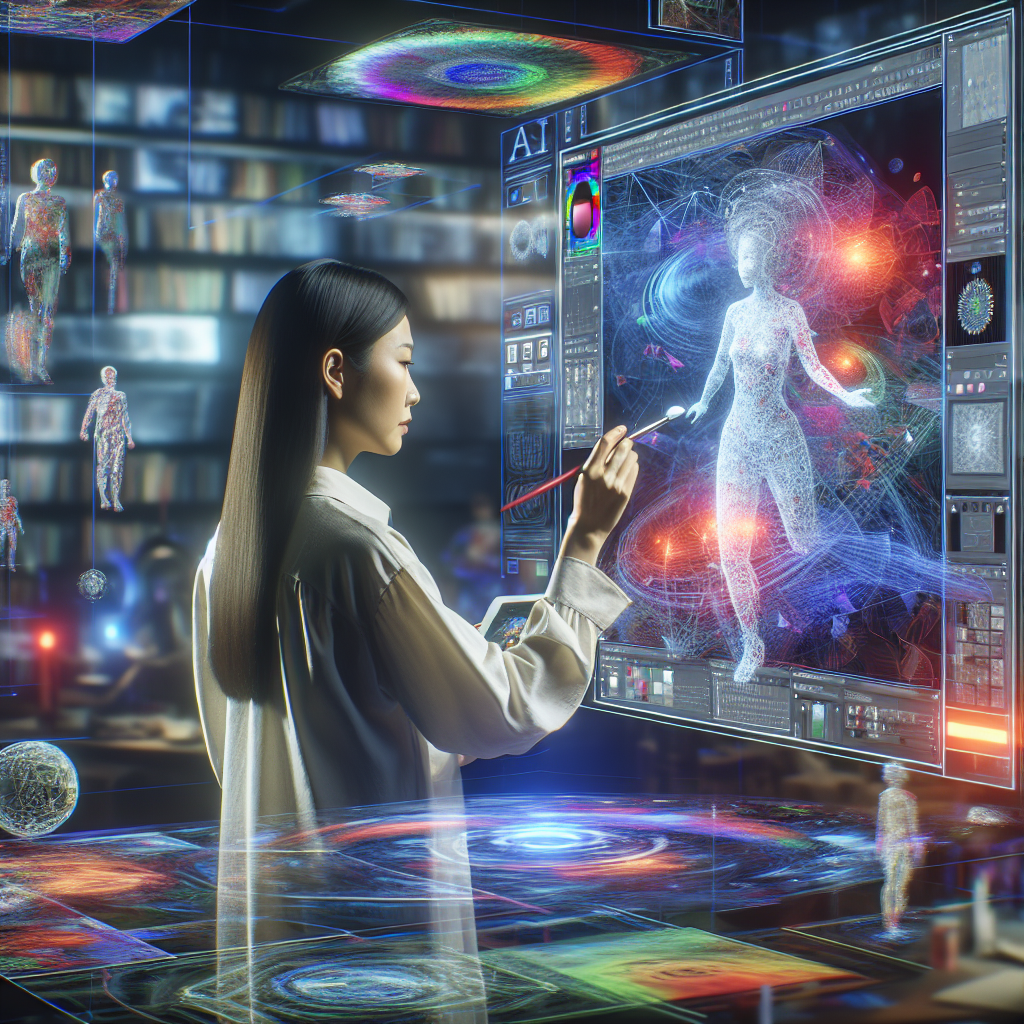

LayerComposer introduces two key innovations that set it apart from conventional diffusion-based T2I models. The first is the *layered canvas*, a representation in which each subject occupies its own layer. Users can move, scale, or rearrange each subject independently, mirroring the layer-based workflows of professional image-editing software such as Adobe Photoshop. The second is the *locking mechanism*, which preserves selected layers with high visual fidelity while letting the remaining layers adapt flexibly to the surrounding scene context. Notably, locking requires no changes to the core architecture of the underlying generative model; instead, it relies on the model's positional embeddings combined with a complementary data sampling strategy.
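To make the layered canvas more concrete, here is a minimal Python sketch of the editing state it implies. The class names `Layer` and `LayeredCanvas`, their fields, and the methods `move`, `resize`, and `set_locked` are hypothetical and not taken from the paper's code; the sketch only tracks each subject's position, size, and lock flag and does not perform any image generation.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """One subject on its own layer (hypothetical fields, for illustration)."""
    name: str
    x: int       # left edge on the canvas, in pixels
    y: int       # top edge on the canvas, in pixels
    width: int   # layer width, in pixels
    height: int  # layer height, in pixels
    locked: bool = False  # True = this subject should be preserved faithfully


@dataclass
class LayeredCanvas:
    """A canvas holding independently editable subject layers."""
    width: int
    height: int
    layers: list = field(default_factory=list)

    def add(self, layer: Layer) -> None:
        self.layers.append(layer)

    def get(self, name: str) -> Layer:
        for layer in self.layers:
            if layer.name == name:
                return layer
        raise KeyError(f"no layer named {name!r}")

    def move(self, name: str, dx: int, dy: int) -> None:
        # Translate one layer without touching the others.
        layer = self.get(name)
        layer.x += dx
        layer.y += dy

    def resize(self, name: str, factor: float) -> None:
        # Scale one layer about its top-left corner.
        layer = self.get(name)
        layer.width = max(1, round(layer.width * factor))
        layer.height = max(1, round(layer.height * factor))

    def set_locked(self, name: str, locked: bool = True) -> None:
        self.get(name).locked = locked

    def locked_layers(self) -> list:
        return [layer.name for layer in self.layers if layer.locked]


# Usage: two subjects, one moved, the other enlarged and locked.
canvas = LayeredCanvas(1024, 1024)
canvas.add(Layer("person_a", 100, 300, 300, 600))
canvas.add(Layer("person_b", 550, 320, 280, 560))
canvas.move("person_b", -50, 0)
canvas.resize("person_a", 1.5)
canvas.set_locked("person_a")
print(canvas.locked_layers())  # ['person_a']
```

In this sketch, locking does not prevent a layer from being moved or resized; it only marks the subject as one to reproduce faithfully, leaving unlocked subjects free to adapt to the scene.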

From a technical standpoint, LayerComposer's layered canvas is a spatially aware representation that ties each subject's visual content to its position on the canvas, bridging user-driven composition and generative synthesis. The locking mechanism then determines which layers are reproduced faithfully and which, together with the background, are adjusted to fit the rest of the scene. Experimental results show that LayerComposer achieves better spatial controllability, subject identity preservation, and multi-subject coherence than leading methods in multi-subject personalized image generation. This makes it a promising tool for artists, designers, and developers building interactive image synthesis systems.
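The paper states only that locking relies on positional embeddings rather than architectural changes. The following Python sketch shows one way such a rule *could* work, under loudly stated assumptions: a 3-axis position id `(index, row, col)` per latent token on a grid of 16-pixel cells, with locked layers reusing the target image's index 0 (so their tokens land on the same grid positions as the image being generated) and each unlocked layer receiving its own index. The functions `token_position_ids` and `assign_layer_ids`, the patch size, and the indexing rule are all hypothetical illustrations, not the authors' implementation.

```python
def token_position_ids(x, y, w, h, index, patch=16):
    """Return (index, row, col) ids for every latent token a box covers.

    Hypothetical 3-axis scheme: `index` distinguishes images, while
    (row, col) locate the token on a grid of `patch`-pixel cells.
    """
    rows = range(y // patch, (y + h) // patch)
    cols = range(x // patch, (x + w) // patch)
    return [(index, r, c) for r in rows for c in cols]


def assign_layer_ids(layers, patch=16):
    """Hypothetical locking rule based only on position ids.

    Locked layers reuse the target's index 0, so their tokens share
    positions with the image being generated; each unlocked layer gets
    a fresh index, so it acts as a looser reference.
    """
    ids = {}
    next_index = 1
    for layer in layers:
        if layer["locked"]:
            index = 0
        else:
            index = next_index
            next_index += 1
        ids[layer["name"]] = token_position_ids(
            layer["x"], layer["y"], layer["w"], layer["h"], index, patch
        )
    return ids


# Usage: a 64x64 target with one locked and one unlocked 32x32 layer.
target_ids = token_position_ids(0, 0, 64, 64, index=0)
layers = [
    {"name": "a", "x": 0, "y": 0, "w": 32, "h": 32, "locked": True},
    {"name": "b", "x": 32, "y": 32, "w": 32, "h": 32, "locked": False},
]
ids = assign_layer_ids(layers)
print(ids["a"])  # [(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)]
print(ids["b"])  # [(1, 2, 2), (1, 2, 3), (1, 3, 2), (1, 3, 3)]
print(set(ids["a"]) <= set(target_ids))  # True
```

The appeal of a rule like this is that it touches only the position ids fed to an existing model, which fits the paper's claim that locking needs no architectural changes. The training-side "complementary data sampling strategy" is not modeled here.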

The implications of LayerComposer extend beyond individual creativity: it paves the way for collaborative AI-assisted design environments and next-generation content creation platforms. By bringing interactive, layer-based editing into the generative process itself, LayerComposer combines the flexibility of human artistic control with the power of deep-learning-based visual synthesis.

Source:

arXiv:2510.20820 [cs.CV] — ‘LayerComposer: Interactive Personalized T2I via Spatially-Aware Layered Canvas’ by Guocheng Gordon Qian et al., submitted on 23 Oct 2025. DOI: https://doi.org/10.48550/arXiv.2510.20820